Automating Linux, Hadoop, AWS, and Docker with a Menu-Driven Program

Technology is all about making life easier, right? But managing complex systems like Linux, Hadoop, AWS, and Docker often requires specialized skills and repetitive tasks. What if you could simplify all of it (spinning up AWS instances, managing Docker containers, or setting up Hadoop clusters) with a single terminal-based Python program? Sounds like magic, but it’s achievable.

In this blog, I’ll share my journey of creating a menu-driven automation program that seamlessly integrates these powerful technologies, along with real-life examples and practical tips to inspire your own automation projects.

Why Automate?

Automation isn’t just about reducing manual effort; it’s about saving time, cutting down on errors, and freeing you to focus on more creative and impactful work. Whether you’re an IT professional setting up servers daily or a student experimenting with Docker and Hadoop, this program can be a game-changer.

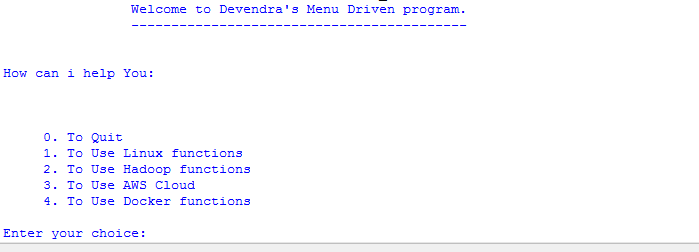

Meet the Automation Menu

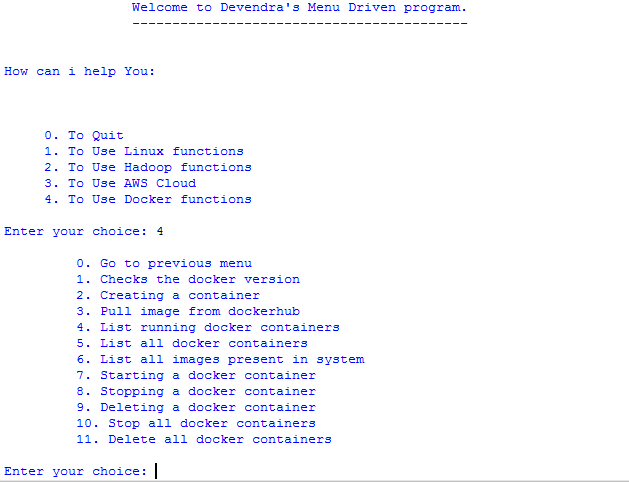

The program I developed uses a simple Python script (python_menu.py) that brings the power of automation to your fingertips. When you run it, you’re greeted with a menu that lets you perform operations on:

- Linux: Manage basic operations effortlessly.

- Hadoop: Set up and manage big data solutions.

- AWS: Handle cloud computing tasks like managing EC2 instances.

- Docker: Simplify containerization workflows.

This program bridges the gap between specialized knowledge and accessibility, letting even newcomers execute powerful commands with ease.

Pre-Requisites

Before you dive in, here’s what you need to have set up:

- Python 3: The backbone of the program.

- Linux OS: The platform this script operates on.

- Hadoop: Installed and configured, for big data tasks.

- AWS CLI: For managing AWS services.

- Docker: Installed, for containerization tasks.

What Can It Do?

Here’s a deeper look at what this program brings to the table:

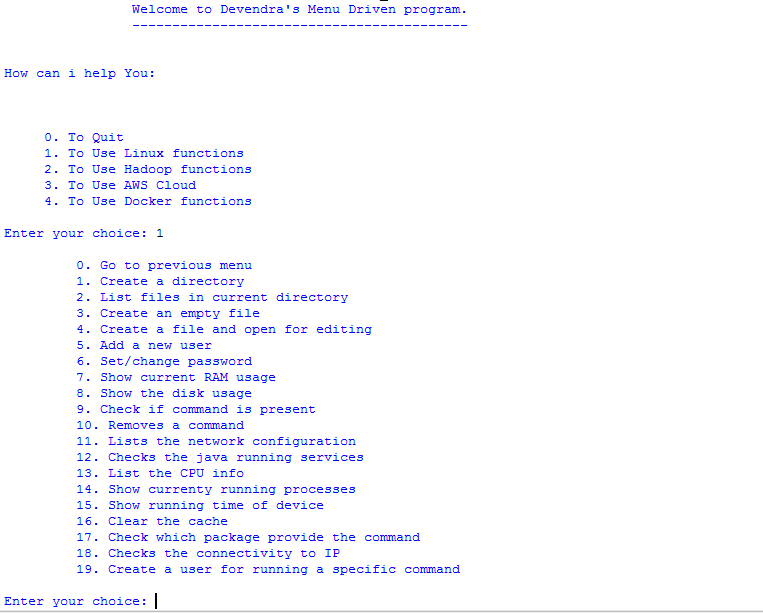

1. Linux Operations

Linux is the foundation of most server environments, and this program simplifies basic operations.

Example: Need to check disk usage, list files, or set permissions? Just select the corresponding menu option, and you’re done.
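The source of python_menu.py isn’t shown here, so the following is only a minimal sketch of how those three Linux options could map to commands. The function names (`disk_usage_cmd`, `list_files_cmd`, `set_permissions_cmd`, `run`) are hypothetical; the commands themselves (`df -h`, `ls -la`, `chmod`) are standard Linux tools. Building each command as a list and running it without a shell avoids quoting problems with file names.

```python
import shlex
import subprocess

# Hypothetical helpers illustrating the Linux menu options; not the author's code.

def disk_usage_cmd(path="/"):
    """Command for 'check disk usage' (human-readable sizes)."""
    return ["df", "-h", path]

def list_files_cmd(directory="."):
    """Command for 'list files' (long listing, including hidden files)."""
    return ["ls", "-la", directory]

def set_permissions_cmd(mode, target):
    """Command for 'set permissions', e.g. mode='644'."""
    return ["chmod", mode, target]

def run(cmd):
    """Echo the command, run it without a shell, and return its stdout."""
    print("$", shlex.join(cmd))
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `print(run(disk_usage_cmd()))` would show disk usage for the root filesystem.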

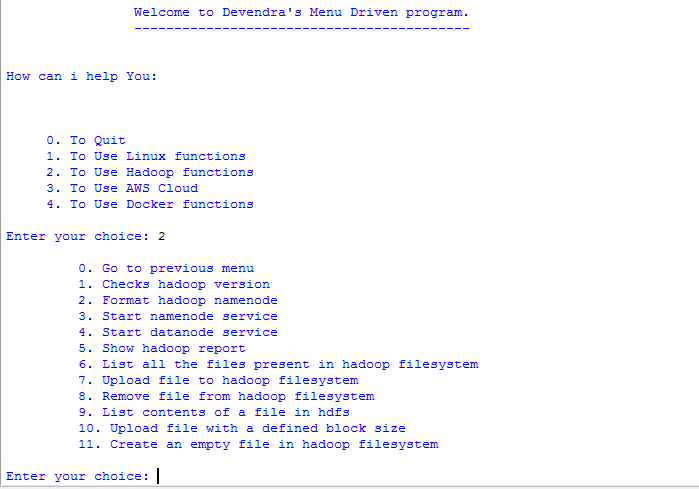

2. Hadoop Operations

Big data can be intimidating, but Hadoop’s distributed framework makes handling massive datasets possible.

Practical Use Case: You can set up a Hadoop cluster, format the NameNode, or check the cluster’s health, all through this menu.

Imagine a data engineer setting up a Hadoop environment for processing terabytes of log data. Instead of typing commands repeatedly, they select options from this menu and finish in minutes.
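As a rough illustration, the Hadoop options could wrap the standard `hdfs` commands. This sketch assumes Hadoop 3.x (for the `hdfs --daemon start` syntax), the `hdfs` launcher on PATH, and that it is run as the HDFS user; the function names are hypothetical, not taken from the original program.

```python
import subprocess

# Assumptions: Hadoop 3.x, `hdfs` on PATH, run as the HDFS user.
# Function names are illustrative only.

def format_namenode_cmd():
    # Destructive: wipes existing HDFS metadata. Use only for a new cluster.
    return ["hdfs", "namenode", "-format"]

def start_daemon_cmd(daemon):
    # Hadoop 3.x daemon syntax; `daemon` is e.g. "namenode" or "datanode".
    return ["hdfs", "--daemon", "start", daemon]

def cluster_report_cmd():
    # Summarizes capacity and live/dead DataNodes: a quick health check.
    return ["hdfs", "dfsadmin", "-report"]

def run_hadoop(cmd):
    """Run an HDFS command in the foreground and return its exit code."""
    try:
        # Output is not captured, so prompts (e.g. a re-format confirmation) stay visible.
        return subprocess.run(cmd).returncode
    except FileNotFoundError:
        print(f"{cmd[0]} not found on PATH; is Hadoop installed?")
        return 127
```

Running the output straight to the terminal is a deliberate choice here: `hdfs namenode -format` may ask for confirmation, and a captured prompt would leave the user staring at a frozen menu.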

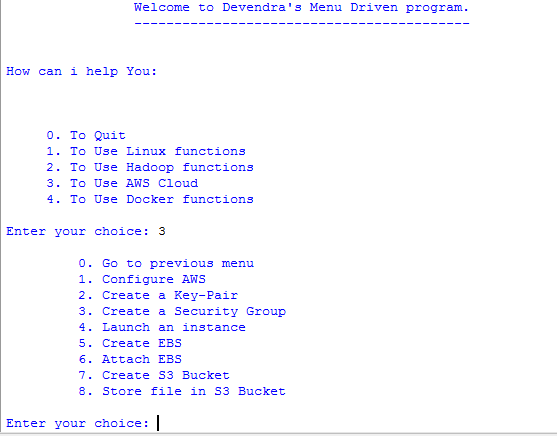

3. AWS Operations

Cloud computing is the present and the future.

Highlights:

- Launch EC2 instances effortlessly.

- Start, stop, or terminate servers.

- Configure S3 buckets for storage.

Scenario: A startup deploying a web application can use this menu to quickly launch and configure its cloud servers without clicking through the AWS Management Console.
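Since the prerequisites list the AWS CLI, one plausible approach is for each AWS option to build an `aws` command. This is a sketch under stated assumptions: the CLI is already configured with credentials (`aws configure`), and the AMI ID, instance ID, and bucket name are placeholders you supply. The subcommands (`ec2 run-instances`, `start-instances`, `stop-instances`, `terminate-instances`, `s3 mb`) are real AWS CLI commands; the Python function names are hypothetical.

```python
import subprocess

# Assumes a configured AWS CLI; IDs and names passed in are placeholders.

def launch_instance_cmd(ami_id, instance_type="t2.micro", count=1):
    return ["aws", "ec2", "run-instances",
            "--image-id", ami_id,
            "--instance-type", instance_type,
            "--count", str(count)]

def instance_state_cmd(action, instance_id):
    # Map a menu action to the matching EC2 subcommand.
    subcommands = {"start": "start-instances",
                   "stop": "stop-instances",
                   "terminate": "terminate-instances"}
    if action not in subcommands:
        raise ValueError(f"unknown action: {action}")
    return ["aws", "ec2", subcommands[action], "--instance-ids", instance_id]

def create_bucket_cmd(bucket):
    # `aws s3 mb` ("make bucket") creates a new S3 bucket.
    return ["aws", "s3", "mb", f"s3://{bucket}"]

def run_aws(cmd):
    """Run an AWS CLI command and return its JSON/text output."""
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
```

Rejecting unknown actions up front keeps a typo in the menu from turning into a confusing AWS CLI error.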

4. Docker Operations

Containerization is essential for modern development workflows. Docker’s lightweight containers isolate applications, making deployment consistent and scalable.

Example: Developers can build images, run containers, and monitor their states—all by selecting options in the program.
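A minimal sketch of the three Docker options, assuming the Docker CLI is installed and the daemon is running. The function names are hypothetical; `docker build -t`, `docker run -d --name -p`, and `docker ps -a --format` are standard Docker CLI usage.

```python
import subprocess

# Hypothetical helpers for the Docker menu options.

def build_image_cmd(tag, context="."):
    # Build an image from the Dockerfile in `context` and tag it.
    return ["docker", "build", "-t", tag, context]

def run_container_cmd(image, name, ports=None):
    # Start a detached container; `ports` maps host port -> container port.
    cmd = ["docker", "run", "-d", "--name", name]
    for host, container in (ports or {}).items():
        cmd += ["-p", f"{host}:{container}"]
    cmd.append(image)
    return cmd

def container_status_cmd():
    # `-a` includes stopped containers; the template prints name and status.
    return ["docker", "ps", "-a", "--format", "{{.Names}}\t{{.Status}}"]

def run_docker(cmd):
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
```

For example, `run_docker(run_container_cmd("nginx:latest", "web", {8080: 80}))` would start an nginx container reachable on host port 8080.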

Why Build a Menu Program?

Creating this menu-driven program wasn’t just about simplifying tasks; it was also about bringing together diverse technologies in one cohesive interface.

Here’s what makes it special:

- Ease of Use: No need to memorize commands or scripts.

- Fewer Errors: Automating repetitive tasks reduces human error.

- Time-Saving: Quickly perform complex tasks with minimal effort.

- Scalability: As I find new technologies to automate, adding them to this program is straightforward.

Behind the Scenes: Developing the Program

This program is a Python-based project that uses standard-library modules like subprocess to execute commands in the terminal. Each menu option corresponds to a predefined function, which runs specific system commands or scripts for the selected technology.
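The "one option, one function" design described above is often written as a dispatch table: a dictionary from the user’s choice to a label and a handler. This is a sketch of that pattern, not the author’s actual code; the two handlers are hypothetical examples, and `read` is injectable so the loop can be driven by something other than the keyboard.

```python
import subprocess

# Hypothetical handlers; each menu option maps to one function.
def linux_disk_usage():
    subprocess.run(["df", "-h"])

def docker_list_containers():
    subprocess.run(["docker", "ps"])

MENU = {
    "1": ("Linux: check disk usage", linux_disk_usage),
    "2": ("Docker: list running containers", docker_list_containers),
}

def main_menu(menu=MENU, read=input):
    """Show the menu, dispatch the choice, and repeat until 'q' or end of input."""
    while True:
        for key, (label, _) in menu.items():
            print(f"{key}. {label}")
        print("q. Quit")
        try:
            choice = read("Select an option: ").strip().lower()
        except EOFError:
            return
        if choice == "q":
            return
        entry = menu.get(choice)
        if entry is None:
            print("Invalid choice, try again.")
            continue
        entry[1]()  # call the handler for this option
```

Call `main_menu()` to start it. With this layout, adding a new technology means adding entries to `MENU` rather than editing the loop.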

Challenges Faced

- Integrating Technologies: Ensuring seamless operation across Linux, Docker, Hadoop, and AWS required detailed testing.

- Error Handling: Building robust error-checking mechanisms for unexpected failures was essential.

- User-Friendly Design: Making the menu intuitive and non-intimidating for beginners was a priority.
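One common way to meet the error-handling goal is to wrap every command in a helper that reports failures instead of crashing the whole menu. This sketch uses only documented `subprocess` behavior (`FileNotFoundError` for a missing program, `CalledProcessError` for a non-zero exit with `check=True`, `TimeoutExpired` for a timeout); the helper name `run_safely` and the 300-second default are assumptions.

```python
import subprocess

def run_safely(cmd, timeout=300):
    """Run a command; print a readable error and return None on failure."""
    try:
        result = subprocess.run(cmd, capture_output=True, text=True,
                                check=True, timeout=timeout)
    except FileNotFoundError:
        # The program itself is missing (e.g. Docker or the AWS CLI not installed).
        print(f"Command not found: {cmd[0]} (is it installed and on PATH?)")
        return None
    except subprocess.CalledProcessError as err:
        # The program ran but exited with a non-zero status.
        print(f"'{' '.join(cmd)}' failed with exit code {err.returncode}:")
        print(err.stderr.strip())
        return None
    except subprocess.TimeoutExpired:
        print(f"'{' '.join(cmd)}' timed out after {timeout} s")
        return None
    return result.stdout
```

Returning `None` lets the menu loop carry on to the next prompt after a failure, which matters for beginners who will inevitably mistype an instance ID or forget to start the Docker daemon.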


Real-Life Applications and Benefits

1. For Students and Beginners

Imagine being a computer science student exploring cloud computing. Instead of wrestling with AWS’s interface or Docker commands, you can focus on learning concepts while the menu handles the heavy lifting.

2. For IT Professionals

Picture an IT admin managing multiple servers daily. With this tool, they can automate routine tasks, leaving them more time for strategic projects.

3. For Developers

Developers can use the program to quickly spin up containers, test deployments, or simulate distributed systems using Hadoop clusters.

Looking Ahead: Expanding the Program

While the current version focuses on Linux, Hadoop, AWS, and Docker, I’m constantly exploring new ways to enhance it. Future updates might include:

- Kubernetes Integration: Automate container orchestration.

- Database Management: Simplify operations for MySQL, PostgreSQL, and more.

- Monitoring Tools: Add options for logging and performance monitoring.

Conclusion

In a world where time is the most valuable resource, automation is your best ally. Whether you’re managing cloud servers, running containers, or analyzing big data, this menu-driven program can transform how you work.

Don’t just dream about making life easier; start building tools that do the work for you. If I could simplify these technologies into a single Python script, imagine what you could create!

What would you automate? Share your ideas in the comments below!